Engineering Service, Inc.

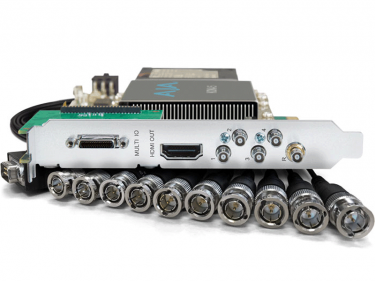

AJA’s KONA 5 I/O card has been available for two years, and is the most recent in a long line of PCI expansion cards offering professional video interfaces for a variety of applications. While the card isn’t new, software developments and firmware releases continue to add capabilities to it. The most recent is Adobe’s support for the KONA 5 as one of the few ways to monitor HDR content in Premiere Pro, over either SDI or HDMI. With this update, many Premiere editors will have the opportunity to edit and view HDR content directly from their timelines on HDR displays for the first time. Getting the best results from HDR workflows requires an understanding of several new technologies and settings, which I intend to cover as thoroughly as possible here as I work my way through the KONA card’s options and settings.

HDR stands for High Dynamic Range, and it has been the big buzzword in televisions for a number of years now, but there has been limited content available to show off the impressive visuals that the technology offers. This is not due to a lack of matching camera technology, which has been available longer than the displays have, but to all of the workflow steps in between. These intermediate steps have taken longer to develop and mature, because increasing the dynamic range of electronic images while maintaining a consistent experience for viewers across different media is much harder than increasing the resolution. Increasing the resolution of a display system requires increasing the sampling frequency and bandwidth and shrinking the individual pixel components, but not much else, and Moore’s law has made that relatively easy as everything made of silicon continues to shrink and operate more efficiently.

Increasing the dynamic range of an image requires more sensitive camera sensors and brighter displays that can also dim much darker for higher contrast. Both are hardware developments that have been available for a while. But unlike increasing resolution, which just requires more processing power, wider color spaces require bigger changes to software, along with methods to convert between the available color spaces. This is further complicated by the fact that increasing the brightness of a displayed color increases its perceived saturation, and this effect varies by wavelength, so certain hues shift when brightness is increased. These perceptual differences, as well as a few others, need to be compensated for when converting images between high and standard dynamic range formats.

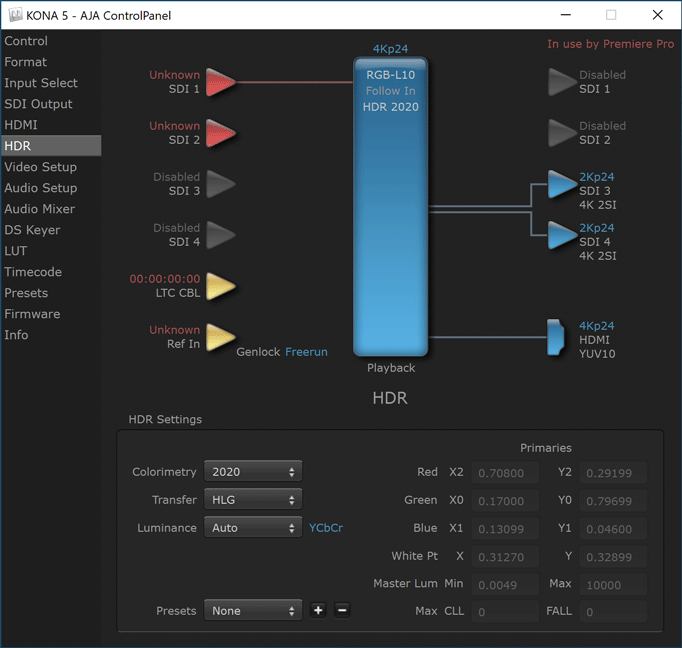

PQ is a nonlinear electro-optical transfer function that allows HDR content to be displayed with brightness values up to 10,000 nits using the Rec.2020 color-space. PQ was codified as SMPTE ST 2084 and is the underlying basis for the “HDR10” standard. HDR10 is an open television standard that combines the Rec.2020 color-space, 10-bit color, and the PQ transfer function with frame and scene metadata describing average and maximum light levels. An HDR10 display uses this metadata, combined with information about its own hardware brightness levels, to interpret the incoming image signal in a way that maximizes the dynamic range of the displayed image while keeping it consistent for viewers across different types of displays.

HLG is also a nonlinear transfer function, but a simpler one that uses the standard gamma curve for the darker half of the signal range and switches to a logarithmic curve for the brighter half. This allows HLG content to be viewed directly on SDR monitors, with a slight change in how the highlights look: increased detail at the cost of slightly lower maximum brightness. On an HLG-aware HDR display, the brighter portions of the image have their brightness values logarithmically stretched to take advantage of the display’s greater dynamic range.

Greater dynamic range also means a greater visual difference between the dark and light areas of an image, with more values in between. Digitally reproducing that greater range of values requires greater bit depth. 8-bit gamma-encoded images can reproduce a range of about 6 stops without visible banding. Trying to display an 8-bit image with higher contrast than that results in visible steps between the individual colors, usually in the form of bands around bright points on the screen. Increasing the bit depth to 10 bits gives 4 times as many levels per channel and 64 times as many possible colors, extending that range to about 10 stops.
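To make the two curves described above more concrete, here is a minimal Python sketch of the PQ encode/decode pair and the HLG encoding curve. The constants are the published SMPTE ST 2084 and ITU-R BT.2100 values; the function names are my own, and the sketch ignores everything a real pipeline also has to handle (metadata, display mapping, the HLG system gamma).

```python
import math

# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance in nits (0..10000) -> PQ signal value (0..1)."""
    y = max(nits, 0.0) / 10000.0
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2


def pq_decode(signal: float) -> float:
    """PQ signal value (0..1) -> absolute luminance in nits."""
    ep = signal ** (1.0 / M2)
    return 10000.0 * (max(ep - C1, 0.0) / (C2 - C3 * ep)) ** (1.0 / M1)


# ITU-R BT.2100 HLG constants
HLG_A = 0.17883277
HLG_B = 1.0 - 4.0 * HLG_A
HLG_C = 0.5 - HLG_A * math.log(4.0 * HLG_A)


def hlg_encode(scene_light: float) -> float:
    """Normalized scene light (0..1) -> HLG signal value (0..1).

    Below 1/12 the curve is a square root (a gamma-style curve);
    above it the curve becomes logarithmic.
    """
    if scene_light <= 1.0 / 12.0:
        return math.sqrt(3.0 * scene_light)
    return HLG_A * math.log(12.0 * scene_light - HLG_B) + HLG_C


if __name__ == "__main__":
    for nits in (0.1, 1, 100, 1000, 10000):
        print(f"{nits:>7} nits -> PQ signal {pq_encode(nits):.4f}")
    for light in (0.01, 1 / 12, 0.5, 1.0):
        print(f"scene light {light:.4f} -> HLG signal {hlg_encode(light):.4f}")
```

Note how 100 nits, roughly the SDR reference white level, lands near the middle of the PQ signal range, leaving the upper half of the code values for highlights.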
Using a more complex transfer function, those same 10 bits can be used to display over 17 stops of range without visible banding, if the display is bright enough. So all HDR content is stored and processed with at least 10 bits of color depth, regardless of whether it is PQ or HLG.

HDR content can be transmitted over HDMI connections or SDI cables. HDMI 2.0 allowed 4Kp60 over a single cable. The 2.0a version added support for HDR video in the form of PQ-based HDR10, and the 2.0b version added support for HLG-based HDR content. The KONA 5 supports both of these options from its HDMI 2.0b port. Users can choose between RGB and YUV output, and 8-, 10-, or 12-bit color output. I have been testing primarily at YUV-10 HLG, at 4Kp24, because that is what most of my source footage is.

The KONA card has four bi-directional 12G-SDI ports, supporting frame sizes from HD up to 8Kp60 with Quad 12G output. 12G-SDI has the bandwidth to send a 4Kp24 RGB signal over a single cable, or the signal can be divided across four 3G-SDI channels. When using a single 12G-SDI cable to output 4K, the KONA 5 can also output a separate 2K down-converted 3G signal on the other SDI monitoring output, allowing the use of older HD displays.

When using Quad-channel SDI, two different approaches can be taken to dividing the data between the channels. The first is to break the frame into quadrants and send each zone on a separate cable. The second is 2-Sample Interleave (2SI), which alternates streams every two pixels, resulting in each cable carrying a viewable quarter-resolution image. Regardless of which approach is taken, a 4K signal is divided into four 3G HD streams, and 8K is divided into four 12G 4K streams. The AJA control panel allows user control over these modes in the SDI Output section when needed. My monitor allowed me to successfully test the KONA 5’s HDMI output, Quad 3G-SDI output, and 12G-SDI output at 4K, as well as Quad 12G output at 8K.
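The difference between the two quad-link layouts is easier to see as a pixel-to-cable mapping. The following Python sketch is only an illustration: the function names and the 0 to 3 link numbering are my own assumptions, not AJA's API or the exact ordering a given device uses.

```python
from collections import Counter


def square_division_link(x: int, y: int, width: int, height: int) -> int:
    """Quadrant layout: each link carries one quarter of the frame area.

    Assumed numbering: 0 = top-left, 1 = top-right,
    2 = bottom-left, 3 = bottom-right.
    """
    right = 1 if x >= width // 2 else 0
    bottom = 2 if y >= height // 2 else 0
    return bottom + right


def two_sample_interleave_link(x: int, y: int) -> int:
    """2SI layout: pairs of pixels alternate between two links on each
    line, and even/odd lines use different pairs of links, so every link
    carries a quarter-resolution version of the whole picture."""
    return 2 * (y % 2) + (x // 2) % 2


if __name__ == "__main__":
    width, height = 16, 8  # tiny stand-in for a 3840x2160 frame

    for name, mapper in (
        ("quadrants", lambda x, y: square_division_link(x, y, width, height)),
        ("2SI", two_sample_interleave_link),
    ):
        print(name)
        for y in range(height):
            print(" ".join(str(mapper(x, y)) for x in range(width)))
        counts = Counter(mapper(x, y) for y in range(height) for x in range(width))
        print("pixels per link:", dict(sorted(counts.items())))
```

Both mappings give each cable exactly a quarter of the pixels. The practical difference is that a single 2SI link still shows the full picture at reduced resolution, while a single quadrant link shows only one corner of it.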

AJA’s software package also includes a media capture and playback application called AJA Control Room. It offers a number of file and format options, and now fully supports HDR workflows in both PQ and HLG. Since Control Room operates the KONA card in YUV mode, it supports 8K playback at up to 60fps over Quad 12G-SDI, which my display is able to accept. I was able to get 8K output to display from Premiere Pro, but it dropped frames during ProRes playback, even at 24p. Using Control Room, I was able to get smooth 8K playback of ProRes HDR files at both 24p and 60p, which is quite an impressive accomplishment on my older-generation workstation. I plan to run more tests on a newer system in the near future, to see if Premiere can perform better with newer hardware.

Working in HDR requires files that support at least 10-bit color and HDR color spaces. The most popular format will likely be Apple’s ProRes, which supports both PQ and HLG content. Other options include Sony’s XAVC format for 10-bit HLG content, and JPEG2000 in 12-bit PQ format. Both H.264 and HEVC can store HDR content in either form, depending on the encoder, though Adobe currently only supports encoding those two formats to PQ as HDR10 in Media Encoder. Other options should continue to become available in the future. I expect HLG to be more popular as a distribution format, especially in the short term, due to its inherent compatibility with a wider array of playback devices.


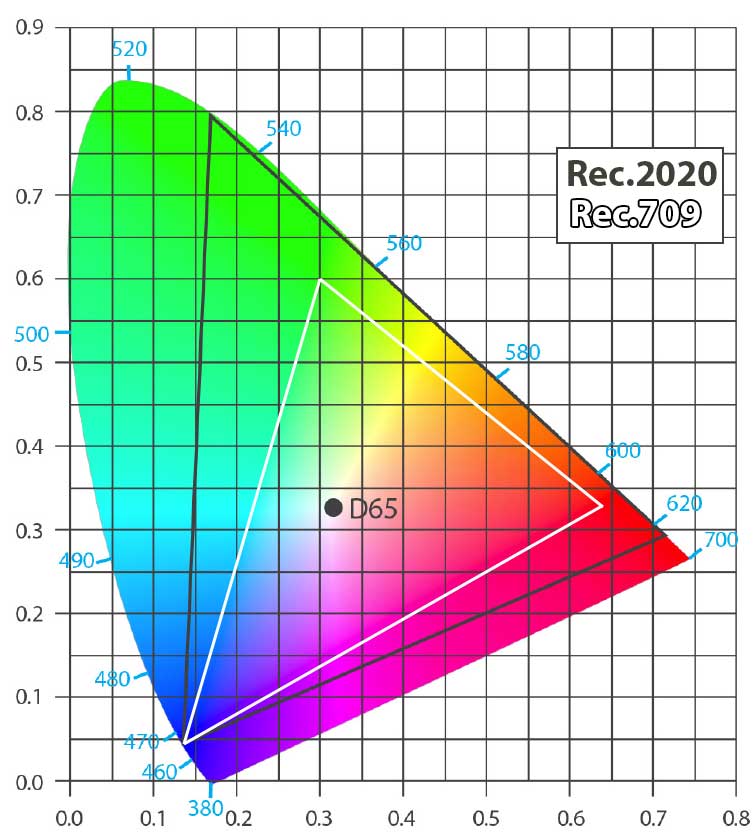

Technically, the jump from SD (standard definition) to HD (high definition) involved the switch from the Rec.601 color-space standard to the Rec.709 color-space standard, which is such a small change that most people, even those working in the business, couldn’t tell the difference. The jump from HD to UHD (ultra-high definition) or “4K” was supposed to involve a change to the Rec.2020 color-space, which offers a noticeably wider potential palette of colors, but in practice much 4K content was edited and finished in Rec.709 and converted to Rec.2020 in a final step before display, not taking advantage of the newer capabilities. Rec.2100 is the next step, laying out the color-space standards for HDR content in HD, UHD, and even 8K. This new color space has the same primary color values as Rec.2020, but allows a much wider range of brightness values. Rec.2100 includes two totally separate approaches to how this can be done, with the mutually exclusive transfer functions of Perceptual Quantization (PQ) or Hybrid Log Gamma (HLG).
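As a rough way to put a number on "noticeably wider," the Python sketch below compares the triangles formed by the published Rec.709 and Rec.2020 primaries on the CIE 1931 xy chromaticity diagram. Area in xy is not perceptually uniform, so treat the ratio as a crude indication and not a measure of how many more colors a viewer actually sees; the variable and function names are my own.

```python
# CIE 1931 xy chromaticity coordinates of the red, green and blue primaries
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
REC2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]


def triangle_area(primaries):
    """Area of the gamut triangle, using the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


area_709 = triangle_area(REC709)
area_2020 = triangle_area(REC2020)
ratio = area_2020 / area_709

if __name__ == "__main__":
    print(f"Rec.709 xy area:  {area_709:.4f}")
    print(f"Rec.2020 xy area: {area_2020:.4f}")
    print(f"Rec.2020 / Rec.709: {ratio:.2f}x")
```

Rec.2100 shares the Rec.2020 primaries, so the same triangle applies to it; what Rec.2100 adds is the PQ and HLG transfer functions, not a wider gamut.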

In the HDR section of the AJA ControlPanel, users can set the Colorimetry to: SDR (Rec.709), Rec.2020, P3, or custom settings.

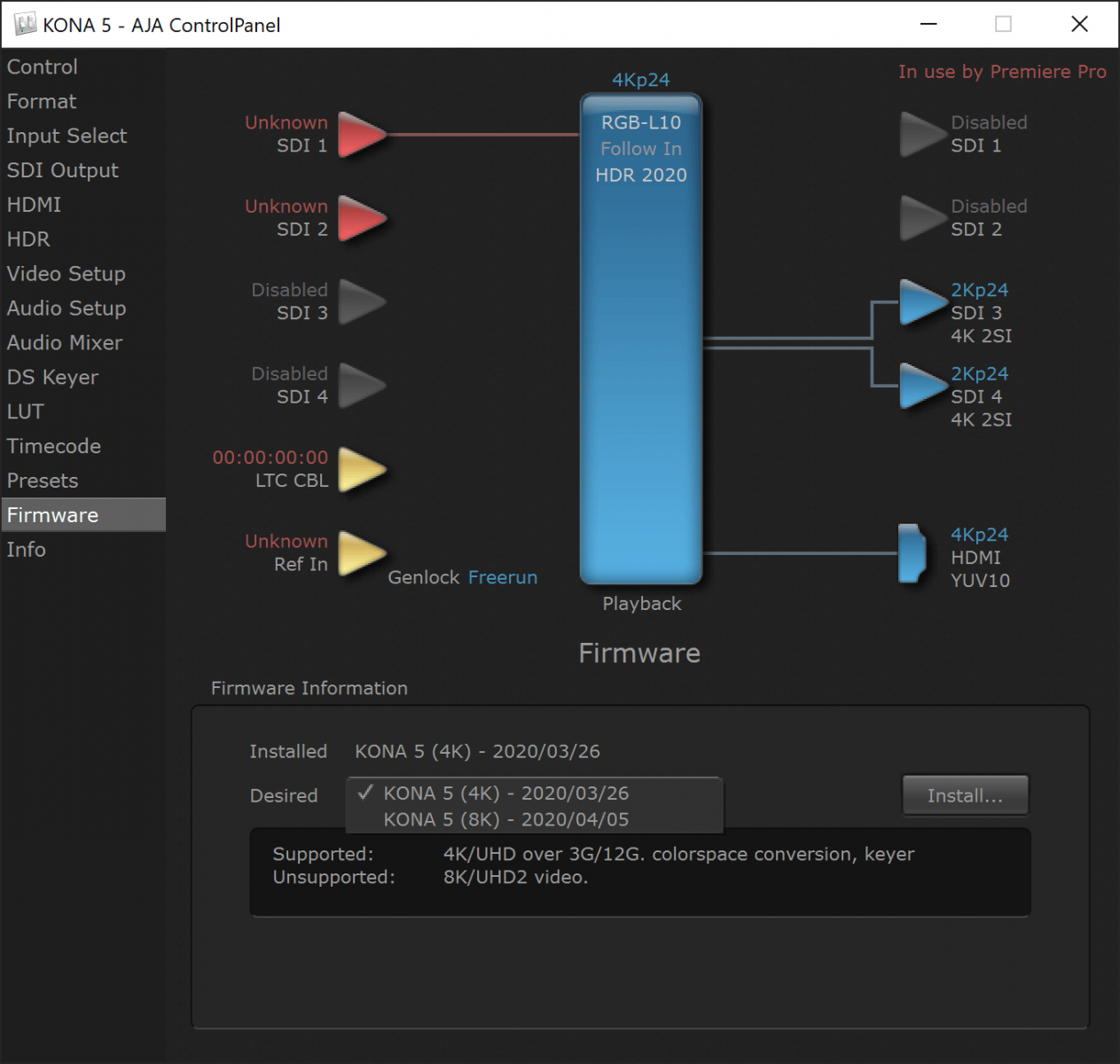

8K output from the KONA 5 card involves a separate firmware or bit file install, which replaces the 4K color-space conversion and keying functionality with 8K image processing.

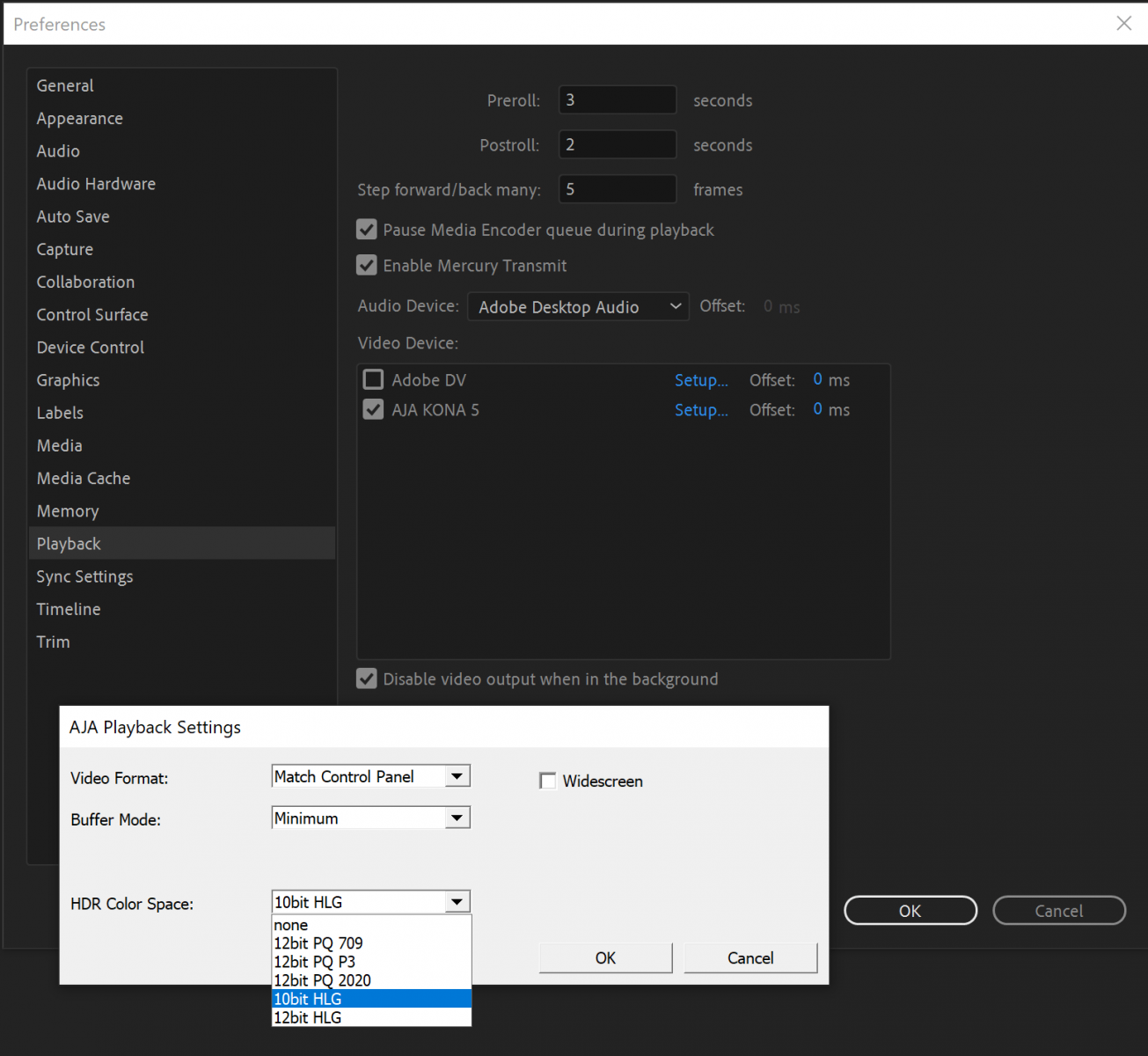

Currently, in order to monitor 50p or 60p HDR sequences in Premiere Pro over SDI, the output must be set to 10-bit HLG.
